For Strong Structured Concurrency

or, How to Avoid Lifetime Footguns in std::execution

It is possible that the law, which is clear-sighted in one sense, and blind in another, might in some cases be too severe. But as we have already observed, the national judges are no more than the mouth that pronounces the words of the law, mere passive beings incapable of moderating either its force or rigor.

Let us consider for a moment the construction of an operation in C++26’s

std::execution (hereafter exec).1 In such a construction we need several

things: we need a sender, the source of an operation; and a receiver, the

sink of an operation. We combine them with exec::connect(sender, receiver) to

form an operation state (op_state).

The resulting object captures the idea of work, but does not induce work by

construction. Work begins only when we enter an operation state with

exec::start(op_state). For simple programs2 the tree-shaped3 collection

of operation states is fixed by composition.4 The density of footnotes in the

previous sentence is sufficient evidence that we ought not linger here, for fear

of foundering amongst them.

This static tree-shape of operation states is insufficient for more complex programs where we need a runtime-determined number of operations in flight concurrently. We materialize this via dynamic operation state nesting, introducing new child operation states within the active lifetime of parent operation states.

We describe this style of composing static and dynamic operation states as structured concurrency. Atop this paradigm, we often layer the requirement that all children must finish their active lifetimes, must exit their receiver’s continuation, prior to their parent doing the same. Let’s call this the strong structured concurrency invariant.6

But this is C++ we’re talking about. The invariant is sound; the language is not. Let’s proceed.

The Problem

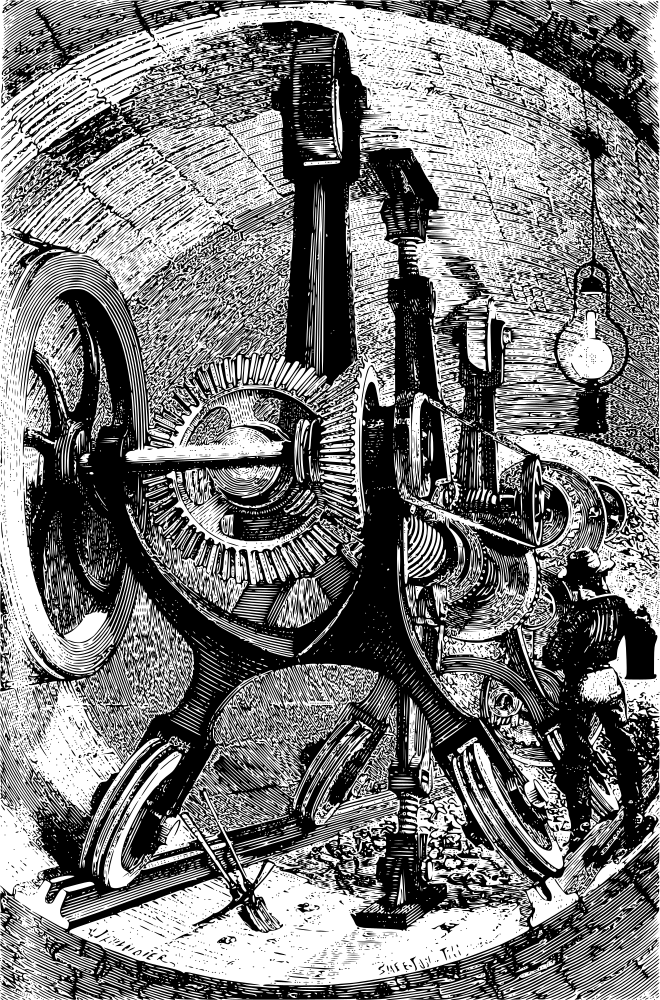

We need some arbitrary, though plausible, shape for an operation state tree. Figure 1 provides this shape. It is contrived, but anyone who has written a client-server networking service will hopefully recognize a familiar pattern here.7

There is, of course, nothing concurrent about this. Easily verified by looking at the output:

Running main on thread: 0

Running doer on thread: 0

Running doer on thread: 0

Running doer on thread: 0

Running doer on thread: 0

Got 4 work results in 8.0s

Results: [0, 1, 2, 3]

Four tasks run sequentially on the same thread, each taking two seconds. No

surprises there. We could write nearly the exact same code using regular

functions and be better off for it. In order for meaningful concurrency to

happen we need to either provide a mechanism for work_doer to suspend while

waiting for its “work” (sleep_for) to end, or we can use parallelism.

The former is more interesting, but the latter is faster to implement; consider Figure 2.

Alas, we have made it worse:

Running main on thread: 0

Running doer on thread: 1

Running doer on thread: 2

Running doer on thread: 3

Running doer on thread: 4

Got 4 work results in 8.2s

Results: [0, 1, 2, 3]

We have achieved neither parallelism nor concurrency: the work_launcher loop

co_awaits each work_doer to completion before starting the next. All we have

purchased is scheduling overhead.

We need a mechanism to start work without also waiting on that work to complete.

This operation is usually called spawn, and in stdexec it is implemented by

exec::async_scope.8 We will avail ourselves of this mechanism in

Figure 3, and finally conquer the tyranny of serial execution.

The results speak for themselves:

Got 4 work results in 2.0s

We have achieved concurrent work_doer execution (via parallelism), producing a

4x speedup in simulated workloads. I will accept my Turing award at the earliest

convenien–

Results: [144536, 0, -1919962110, -1156215799]

Ah. Monkey balls.

The Wrong Solution

Many will have spotted the bug immediately (bravo!). For the remainder fear not: sanitizers find this like fingertips find papercuts.

The bug exists in the relationship between work_launcher and its children, the

work_doers. The input data& to work_doer is a borrowed reference from

work_launcher’s frame. Having spawned the work_doers, work_launcher

promptly exits said frame and the reference is left to dangle.

In an unstructured concurrency world, the usual fix is to ensure that spawned

work has some stake in the ownership of its inputs. This leads to one of two

familiar patterns, transfer ownership (std::move) or share ownership

(std::shared_ptr).

std::shared_ptr is unfashionable but still serviceable when an object has no

natural owner. Our program does not have this problem, ownership of each datum

can move cleanly from parent to child. We can fix the bug by switching to

pass-by-value and moving input data into work_doer, which we illustrate in

Figure 4.

Job done right? Well, even on its best day, std::move() is not free. At best

it is a smaller memcpy()9 plus whatever bookkeeping is required to leave the

source in a valid state. There is no such thing as a free memcpy();10 some

bytes still have to move, even if it’s just a couple pointers. Once you “fix”

lifetimes by ownership transfer, you pay this tax at every spawn boundary.

Worrying about that might be premature optimization. The real costs are those

of flexibility and reasoning. To transfer ownership, ownership must be

transferable. The data we can “fix” with this mechanism must be move

constructible and at least relatively cheap to move (no

std::array<char, 4096>). Moreover, trying to explicitly reason about object

ownership and lifetimes is a layer of complexity we should not engage with

unless absolutely necessary.

The Right Solution

The truth is this is not a lifetime bug, it’s a scoping bug. This code violates

the strong structured concurrency invariant, and all other problems flow

from this violation. The work_launcher is the operation which introduces the

dynamic fanout, therefore it must also be the operation which contains it.

If we move async_scope inside work_launcher and do not let work_launcher

return until the scope is empty, the bug disappears. inputs may remain a

simple local vector, and the children may borrow from it without any

further contortions. Figure 5 illustrates this.

Got 4 work results in 2.0s

Results: [0, 1, 2, 3]

Triumph.

Coda

The alternative title for this post was Nursery Sharing Considered Harmful,

but I couldn’t bring myself to the cliche. Allowing nurseries (eg,

async_scope) to cross task boundaries is a contentious but ubiquitous

capability of structured concurrency implementations. It is a required, or at

least entirely natural, mechanism to tackle some problems.11

The tension comes in the guarantees we want from structured concurency. For the original formulation of a structured approach to error propagation and cancellation handling, nursery sharing is not a major obstacle to program correctness. However, this approach is most popular in languages with reference counted or borrow-checked object lifetimes. C++ is not that.

Strong structured concurrency provides a mechanism to further lighten the cognitive load associated with authoring concurrent programs. In C++, a shared nursery is not a convenience; it is an architectural boundary. We should treat it like one.

-

execis also used by several extensions from thestdexecreference implementation used in this post. No attempt is made to distinguish these. ↩︎ -

“Simple” here does not mean small, trivial, or comprehensible to the average bear. It means only that we can speak about the bounds of the operation from the manner of its assembly, without needing to analyze the internal behavior of the work it performs (its loops, its blocking, its I/O peculiarities, and so on). ↩︎

-

Strictly speaking, not always a tree. Some adapters introduce shared state (multiple downstream operations sharing a single upstream completion), which makes the dependency shape a DAG. Nothing in this post depends on the distinction; “tree-shaped” is the intuition we will keep. ↩︎

-

That is: the space of possible operation-state types is determined by the composed sender expression. Which particular operation-state objects are instantiated, and which are ever started, is a runtime affair5 and varies with control flow; we will treat that as beneath the waterline. ↩︎

-

…and thus fit only for footnotes. ↩︎

-

In its strong form, structured concurrency is a reification of the fully strict fork-join discipline from programming language theory. The more general form of structured concurrency is much younger than the PLT notion. It was first described by Sústrik, and subsequently expanded on by Smith, and Niebler. ↩︎

-

I’m going to use coroutines to play both the

senderandreceiverroles in the examples.exec::taskis a coroutine type that is also usable as a sender. When youco_awaitasenderinside atask, the coroutine’s promise provides the glue such that thesendercan deliver a completion back into the coroutine.The details are not important here; the examples are meant to illustrate lifetime and structuring issues, not the coroutine plumbing. ↩︎

-

In structured concurrency parlance this is a nursery, because it spawns a forest of task trees (aren’t we clever?). A standardized variant exists in

std::executionascounting_scope. ↩︎ -

Pedant’s corner: by

memcpy()I mean “some bounded amount of state must be transferred.” For many handle types this is a few machine words copied via some mechanism; for others (due to allocator quirks, syscalls, etc.) it is more involved in ways that are even more performance intensive than the best case. Either way, it is never “free” in the sense a borrowed reference is. ↩︎ -

…except for all the cases where it is free. ↩︎

-

See Sústrik, “Two Approaches to Structured Concurrency”. ↩︎